🎉 You make my life so fun-fetti

Late to the Party 🎉 is about insights into real-world AI without the hype.

Hello internet,

it’s my birthday! Let’s enjoy some cake… err… machine learning!

The Latest Fashion

- Huggingface Transformers can already serve Meta’s LLama 2!

- Thousands of authors sign a letter urging AI makers to stop stealing books.

- Interesting ways to think about the consciousness of (digital) minds.

Got this from a friend? Subscribe here!

My Current Obsession

It’s my birthday today! 🥳🎉

So I’ll be enjoying the weekend after I send this and maybe explore nature a bit and have good food. My friends are mostly scattered around the world, so I can’t really have a party, but I already played Sea of Thieves tonight, pirating around virtually with some friends, which was lovely. Just a weekend of self-care and things that tickle my brain! If you have any good suggestions for what you love to do, let me know. I’m all open for inspiration!

My new chatGPT course was featured on the cover of the productivity part of Skillshare, which is pretty awesome!

Actually, let me celebrate with a few of you. This link gives the first 10 of you free lifetime access to the course! Skillshare is a bit sneaky and asks for your credit card anyways, but you should be able to skip that screen and access the short course anyways! Hope you enjoy it! Always nice to give something away on your special day!

Thing I Like

I caved and bought an air conditioner, and since temperatures are rising to new highs, my dysregulated neurodivergent body thanks me for it!

Hot off the Press

I posted a new Tiktok!

I open-sourced and published my PhD as a website, and it’s going fairly viral on Linkedin!

Then I told people about leave one feature out-importances, which I shared with you 9 months ago. Good callback and absolutely a library to keep in your stack.

And I loved 3blue1brown’s video about convolutions so much, I shared it again!

In Case You Missed It

My article about choosing a laptop for machine learning is going currently the most popular thing I wrote on my website!

Machine Learning Insights

Last week I asked, Describe a good system to manage neural network configurations!, and here’s the gist of it:

Managing neural network configurations is crucial for efficiently experimenting with different model settings and hyperparameters. Hydra is a fantastic tool that can significantly simplify this process and make managing configurations more organized and convenient.

Hydra is a configuration management framework that allows researchers and developers to create hierarchical configurations for their machine learning projects. It provides a flexible and user-friendly way to define and override configurations for experiments, making it easier to test various settings without modifying the code.

Let's explore an excellent system to manage neural network configurations using Hydra:

- Configuration Hierarchies: Hydra allows you to create configuration files hierarchically. You can have a base configuration file with common settings shared across all experiments. Then, you can define specific experiment configurations that inherit from the base file and override only the necessary parameters. This helps maintain consistency while enabling easy customization for different experiments.

- Parameter Sweeping: Hydra supports parameter sweeping, where you can specify multiple values for hyperparameters, and it will automatically generate combinations to run experiments. This feature helps you explore a wide range of hyperparameter values efficiently, making it easier to find optimal configurations.

- Command-Line Overrides: Hydra allows you to override configuration parameters through the command line. This means you can easily modify specific settings without editing the configuration files directly. It's handy for quick adjustments during experimentation.

- Reusable Configurations: With Hydra, you can define reusable components of your configurations. For example, you can create a set of predefined configurations for different neural network architectures or optimization algorithms. This reusability reduces duplication and promotes consistency across projects.

- Integrations with Libraries: Hydra integrates with various Python libraries, including PyTorch and TensorFlow. This makes incorporating Hydra into existing machine learning projects easy without extensive modifications.

Now, let's consider an example from meteorological data. Suppose we are working on a neural network model to predict 2m temperature based on historical weather data. Using Hydra, we can set up the configuration as follows:

- Base Configuration: Define a base configuration file with default settings for data preprocessing, learning rate, and batch size.

- Experiment-Specific Configurations: Create separate configuration files for different experiments. For example, we could have one configuration for testing various neural network architectures, another for experimenting with different learning rates, and another for exploring batch size variations.

- Parameter Sweeping: For the learning rate experiment, we could specify a range of learning rates using Hydra's parameter sweeping functionality, allowing us to test multiple learning rates in one go.

- Command-Line Overrides: Suppose we want to run a quick test with a smaller batch size. We can easily override the batch size parameter through the command line without modifying the configuration file.

By using Hydra, we can organize and manage neural network configurations effectively, enabling efficient experimentation and facilitating the exploration of different hyperparameters. And each experiment run is saved with its full config, including manual overrides. It simplifies setting up and running experiments, making it a fantastic tool for machine learning researchers and practitioners.

Data Stories

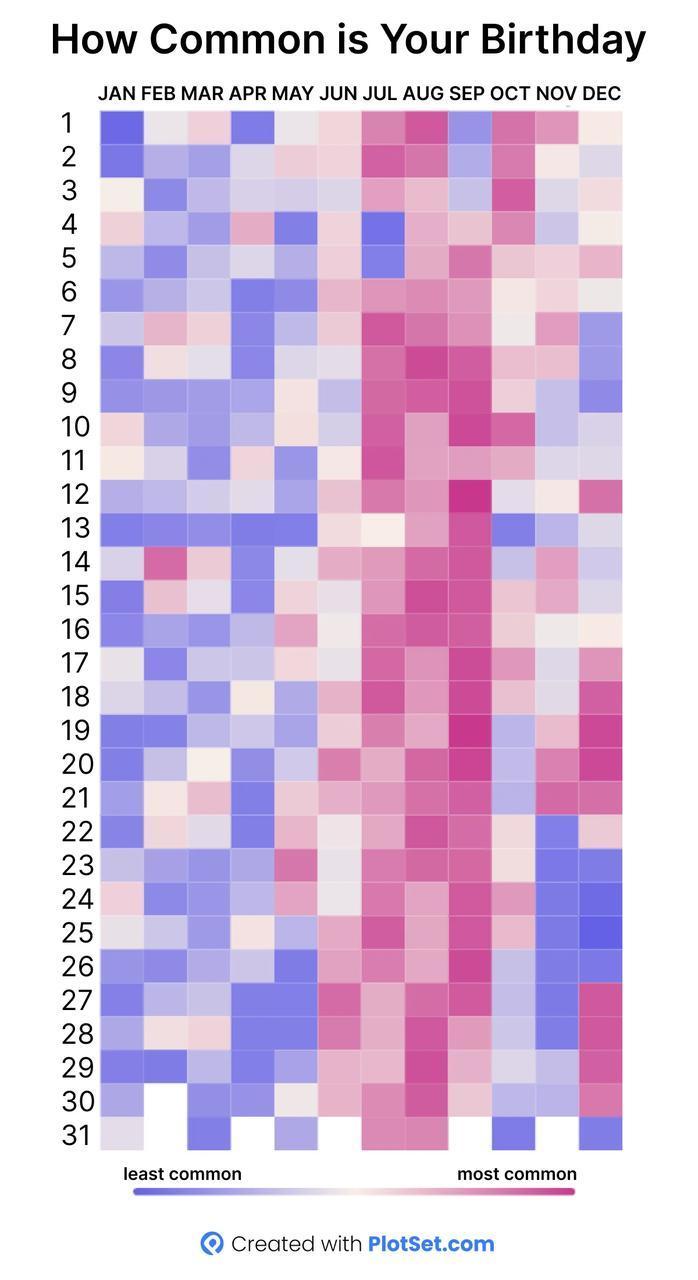

I saw this chart on Reddit, which said: “How common is your birthday?

Immediately, a few fun observations bubble up!

- The summer months see a lot of birthdays 9 months after cosy winter times.

- People seem to push birthdates until after Christmas.

- New Year’s and July 4th are a lot lower as well, given that it’s US American data.

- People don’t want to have a child on September 11

- And weirdly, there is also a low line through the 13th and on October 31st. Superstitions seem to do well!

- It seems like the November time around Thanksgiving is also lower!

Which is fun, but it also seemed off a bit.

- Is it as unlikely to have a child on January 29th as it is on February 29th, when that date only happens every 4-ish years?

- Why is April 21st as unlikely as New Year’s Day and Christmas?

And similar questions. What is the effect of different days actually, since we don’t see any numbers?

This is where a different chart comes in handy!

Actual big effects can be seen on the big national holidays: Christmas, New Year’s and July 4th.

There is also a moderate effect around the week, that is, thanksgiving.

The line around the 13th for each month is also visible, but the effect is a few hundred births at best.

And February 29 was clearly “adjusted” to fit into the yearly plot.

Looks like a case where we should check the choice of colour bars and especially choosing the midpoint on divergent colour bars!

As for my birthday? Perfectly normal, boring summer day in July!

Source: Reddit Data Is Beautiful / User Plotset

Question of the Week

- What is your top priority in making a machine learning experiment reproducible?

Post them on Mastodon and Tag me. I'd love to see what you come up with. Then I can include them in the next issue!

Tidbits from the Web

- I’ve been watching Dimension 20: The Ravening War, which was a delight

- On the topic of recommending Dropout content: Make Some Noise

- I’ve been playing a ton of chess lately. Some to procrastinate…

Add a comment: