🌳 Trees hate when the calendar turns a leaf to Sep-timber

Late to the Party 🎉 is about insights into real-world AI without the hype.

Hello internet,

The last month just flew by. Let’s gather, resettle, and start September with some awesome machine learning!

The Latest Fashion

- WeatherBench 2 is out! And for ML benchmarks, it’s very pretty to click around in!

- The AI grifters have done it. Dangerous AI-generated mushroom foraging books pop up on Amazon.

- Here’s an anti-hype LLM reading list

Got this from a friend? Subscribe here!

My Current Obsession

Dimension 20 just announced a bunch of live shows in the UK and Ireland! They’re in April 2024, and tickets are still available!

Apart from that, I’ve been struggling a bit this week. I’m still trying to figure out how to fit exercise and filming into my busy schedule. Any advice on that would be appreciated!

Realising that we’re now deep into the latter part of 2023, I am trying to recalibrate what I want to achieve this year. Let’s see what’s still in the tank.

Thing I Like

I just spent an ungodly amount of money at IKEA to improve the storage in my flat. Get yourself some “Variera”, which you can use to add little steps to your cupboards. Truly a space wonder.

Hot off the Press

I wrote about how machine learning changed optimisation in computational physics. A nice loop back to where a lot of machine learning ideas came from.

Here’s the long-awaited piece about my experience with using Hydra configurations for machine learning development.

In Case You Missed It

My post about cached properties in Python has been making the rounds!

On Socials

My story about talking about stochastic gradient descent at an Amazon interview was predictably popular on LinkedIn. People there also enjoyed my writing about reducing complexity in machine learning projects and left some lovely comments.

Mastodon loved me sharing ManimML, a 3Blue1Brown-style machine learning visualisation library.

I also wrote about machine learning reproducibility and how code versioning is not enough to reproduce your ML model. And I shared a nice observation about ML engineers and ML scientists.

Python Deadlines

I just discovered that PyData Global is back!

We have two upcoming deadlines for online conferences this week: the PyLadies Conference and the Python Conference for High-energy Physics.

Machine Learning Insights

Last week, I asked, “What strategies do you employ to speed up neural network training?” Here’s the gist of it:

Speeding up neural network training is a common concern in machine learning, and I employ several strategies as a machine learning expert to achieve faster training times. These strategies help make the training process more efficient without sacrificing the quality of the model’s performance.

Here are some beginner-friendly strategies:

- Transfer Learning: Transfer learning involves using pre-trained models as a starting point for training on a new task. Using knowledge learned from one task, we can significantly reduce the time required to train a model on a related task.

  Example in Meteorology: A pre-trained model that has learned to recognise weather patterns from historical data, such as the AtmoRep foundation model, can be fine-tuned for specific weather forecasting tasks, saving training time compared to training from scratch.

- Batch Training: Instead of updating the model’s weights after processing each data point, we group data into batches, as explained in last week’s ML Insight on stochastic gradient descent. This reduces the number of weight updates and speeds up training because it takes advantage of efficient matrix operations that can be parallelised by modern hardware.

- GPU Acceleration: Graphics Processing Units (GPUs) are specialised hardware that can perform matrix operations much faster than CPUs. Training neural networks on GPUs can significantly reduce training time. Many deep learning frameworks, like TensorFlow and PyTorch, support GPU acceleration.

- Model Pruning: Model pruning involves removing unnecessary or less critical connections or parameters from a neural network. Smaller models train faster. Techniques like weight pruning and structured pruning can reduce the size of a model without significantly impacting its performance.

- Early Stopping: Instead of training a model for a fixed number of epochs, we monitor its performance on a validation dataset during training. When the performance stops improving, we stop training early. This prevents overfitting and saves training time.

- Just-in-Time Compilation (JIT): JIT compilation involves dynamically compiling parts of the neural network code during training. This optimisation technique can significantly reduce training time by converting parts of the code into more efficient representations just before execution, like using torch.compile(model).

- External Libraries: External libraries like Huggingface Accelerate and Microsoft DeepSpeed are designed to accelerate deep learning training. They offer optimisations such as model parallelism, gradient accumulation, and memory management that can dramatically speed up training while using existing hardware.
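To make the early stopping idea from the list a bit more concrete, here is a minimal sketch in plain Python. The `EarlyStopping` class, its `patience` and `min_delta` parameters, and the validation losses are all made up for illustration; in a real project, you would call something like this once per epoch after evaluating on your validation set.

```python
class EarlyStopping:
    """Hypothetical helper: signal a stop when validation loss stalls."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience    # epochs to wait without improvement
        self.min_delta = min_delta  # smallest change that counts as better
        self.best_loss = float("inf")
        self.bad_epochs = 0

    def should_stop(self, val_loss):
        if val_loss < self.best_loss - self.min_delta:
            # New best validation loss: remember it and reset the counter.
            self.best_loss = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Invented validation losses standing in for a real evaluation step.
simulated_val_losses = [0.90, 0.70, 0.60, 0.61, 0.62, 0.63, 0.50]

stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, val_loss in enumerate(simulated_val_losses):
    # A real loop would train for one epoch and evaluate the model here.
    if stopper.should_stop(val_loss):
        stopped_at = epoch
        break

print(f"Stopped after epoch {stopped_at}, best loss {stopper.best_loss}")
```

Note the trade-off in this toy run: training stops after three epochs without improvement, so it never reaches the later, lower loss. A larger `patience` costs more training time but is less likely to stop too soon.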

When applied effectively, these strategies can help speed up the training of neural networks, making them more practical and efficient tools for various applications, including meteorology.
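If you want to see the batch training point in action, here is a small sketch of mini-batch gradient descent fitting a straight line, in plain Python. The function names, the learning rate, and the noise-free toy data are my own assumptions for illustration, not a recipe from any particular framework.

```python
import random


def iterate_minibatches(data, batch_size, rng):
    """Yield the data in shuffled chunks of at most batch_size samples."""
    indices = list(range(len(data)))
    rng.shuffle(indices)
    for start in range(0, len(indices), batch_size):
        yield [data[i] for i in indices[start:start + batch_size]]


def fit_line(data, batch_size=8, epochs=200, lr=0.05, seed=0):
    """Fit y = w * x + b with mini-batch gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    updates = 0
    for _ in range(epochs):
        for batch in iterate_minibatches(data, batch_size, rng):
            # Average the gradient over the batch, then update once.
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
            updates += 1
    return w, b, updates


# Toy data on the line y = 3x + 1 (invented for this example).
data = [(x / 10, 3.0 * (x / 10) + 1.0) for x in range(32)]
w, b, updates = fit_line(data)
print(f"w={w:.3f}, b={b:.3f}, weight updates={updates}")
```

With 32 samples and a batch size of 8, each epoch performs 4 weight updates instead of 32. On real hardware, each of those batch gradients would be one vectorised matrix operation rather than a Python loop, which is where the speed-up comes from.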

Data Stories

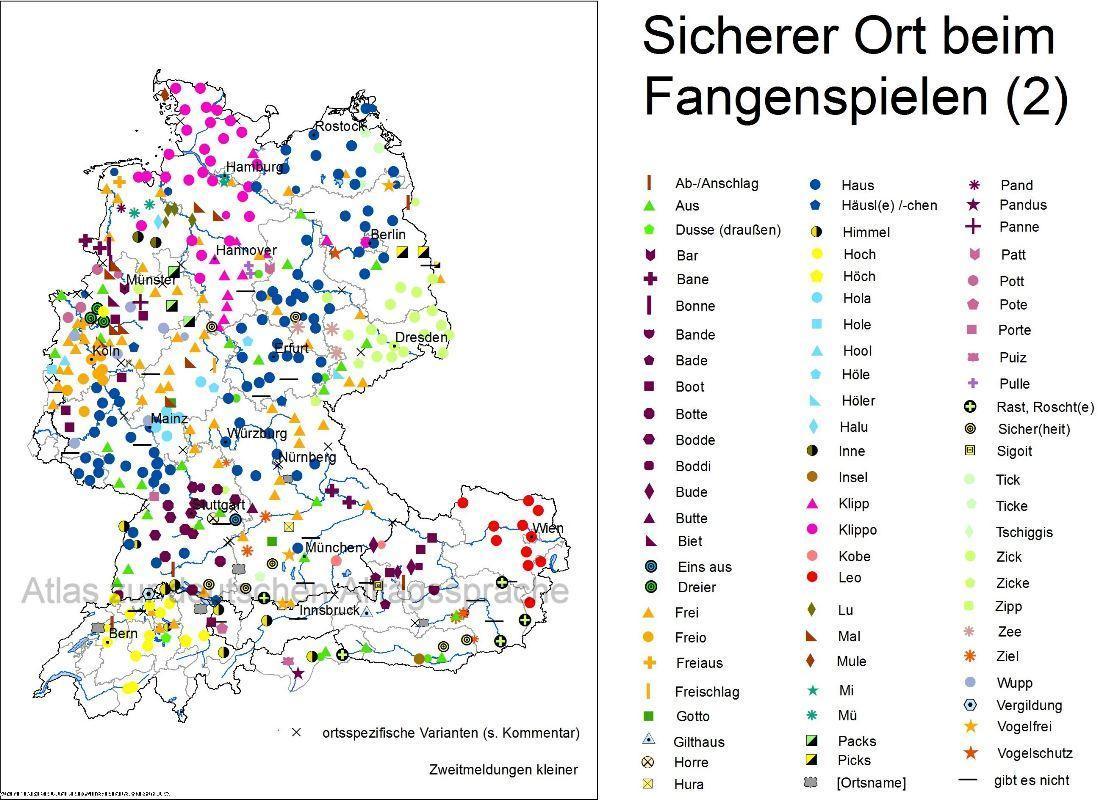

Have you ever played tag?

Sure you have. You probably remember how to declare that you’re in the safe zone.

But how?

German everyday language is known to be very diverse. When I saw this chart, it immediately brought me back to how “we used to say we’re safe from being tagged”.

“Clippo” or “Mi”.

So I was curious because clearly, I grew up in the North. The Clippo region.

But how does “Mi” play into it?

Well, I grew up close to Hamburg. It’s a small island of different ways to say it in the middle of a sea of Clippo.

You may be wondering what “Clippo” and “Mi” mean. If you find out, let me know, because they mean nothing in German!

A lot of those words don’t mean a thing!

Did you have weird words to declare your tag-immunity as children?

Source: Atlas der Alltagssprache

Question of the Week

- What is a vector database, and why did they rise in popularity recently?

Post your answers on Mastodon and tag me. I’d love to see what you come up with. Then, I can include them in the next issue!

Job Corner

The ECMWF is hiring five people who touch machine learning right now!

Four positions in the core team to develop data-driven machine learning weather forecasting:

- Machine Learning Engineer: Focus on model optimisation and parallel implementations to train large machine learning models on vast datasets. Prior experience with deep learning frameworks, model optimisation, and memory footprint improvements is essential. Background in earth-system modelling is welcomed.

- Observations and Data Assimilation Expert: Interface observations with machine learning algorithms and play a vital role in data assimilation. Exceptional interpersonal skills and expertise in using earth-system observations are highly valuable for this role.

- Machine Learning Scientist for Learning from Observations: Contribute to making future earth system predictions from observation data using deep learning frameworks. Experience in earth-system observation data and data assimilation approaches is desirable.

- Machine Learning Scientist for Precipitation: Specialise in accurate precipitation predictions with generative machine learning models. Experience with GANs, VAEs, or Diffusion approaches and expertise in using neural networks for precipitation prediction are advantageous.

And one on the EU project Destination Earth:

You will leverage cutting-edge machine learning techniques and statistical methods to support uncertainty quantification for weather-induced extremes in the revolutionary Destination Earth (DestinE) Digital Twin. Your work will contribute to more accurate and reliable predictions, shaping the future of weather forecasting and its impact on climate understanding and resilience. If you’re a proactive and talented individual with a passion for Earth System Science and a flair for machine learning, apply now and make a meaningful difference in tackling climate challenges.

(I like to stress that these positions are, as always, written by a committee, so if you identify as part of an under-represented minority, please consider applying, even if you don’t hit every single bullet point.)

Tidbits from the Web

- Why corporate America is obsessed with Company Culture

- This fun little TikTok about investing early in your life

- The cutest TikTok I’ve seen all week
