🍧 Newspaper reporter looking for scoop at ice cream shop

Late to the Party 🎉 is about insights into real-world AI without the hype.

Hello internet,

We’re having a proper “news” issue this week! Let’s look at the machine learning news!

The Latest Fashion

- People are trying out GPT-4, and it’s pretty fun to play around with!

- This tool helps you benchmark your PyTorch DataLoaders.

- PyTorch 2.0 is the fastest PyTorch yet, and even more Pythonic (whatever that means)!

Got this from a friend? Subscribe here!

My Current Obsession

I launched The Latent Space last week and have been looking to connect with people. Today we had the first chat in the accountability circle, and it was a lovely chat with Sree about what we’re doing currently and what’s working.

I want this to be a place for deep conversation and for sharing ideas and cool finds among people who apply machine learning in the real world. I’m considering making the FourCastNet paper the first one we discuss in the upcoming Journal Club. What do you think?

Thing I Like

My new microphone is an SM7B and it sounds incredibly clear and fantastic. Can’t wait to create content with it.

Machine Learning Insights

Last week I asked, “What is the kernel trick and why is it so impactful?” Here’s the gist of it:

The kernel trick is a mathematical technique that helps us solve complex machine learning problems. It lets us work with data as if it had been transformed into a higher-dimensional space, where it is often easier to classify or analyze, without ever computing that transformation explicitly. Instead, a kernel function directly calculates the similarity (technically, the inner product) between two data points in the high-dimensional space.
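To make the idea of a kernel function a bit more concrete, here is a minimal sketch in plain Python. It assumes a degree-2 polynomial kernel on 2D points, which is my choice for illustration and not something specific to any library; `phi`, `dot`, and `poly_kernel` are hypothetical helper names. The point is that the kernel gives the same number as first mapping both points into 3D and then taking the inner product there.

```python
import math


def dot(a, b):
    """Plain inner product of two equal-length tuples."""
    return sum(ai * bi for ai, bi in zip(a, b))


def phi(x):
    """Explicit degree-2 feature map from 2D to 3D (illustrative helper)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def poly_kernel(x, y):
    """Homogeneous degree-2 polynomial kernel: (x . y)^2, computed in 2D."""
    return dot(x, y) ** 2


x = (1.0, 2.0)
y = (3.0, -1.0)

explicit = dot(phi(x), phi(y))  # transform first, then compare in 3D
implicit = poly_kernel(x, y)    # compare directly, never leaving 2D

print(explicit, implicit)
```

Both values agree (up to floating-point rounding), even though `poly_kernel` never builds the 3D vectors. That shortcut is the "trick".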

A classic example is binary classification where class B encircles class A in a 2D plot. When we add a third dimension using a bell-shaped function centered on the inner class, class A is lifted above class B in 3D space. Now we can simply fit a linear classifier (a flat plane) instead of searching for the right non-linear boundary in 2D. A much easier search space!
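The encircled-class example can be sketched in a few lines of plain Python. Everything here is a toy assumption of mine: the ring radii, the noise level, the Gaussian bump `exp(-(x² + y²))` as the "bell shape", and the hand-picked threshold standing in for a fitted linear classifier.

```python
import math
import random

random.seed(0)  # reproducible toy data


def ring(radius, n, noise=0.1):
    """Sample n points scattered around a circle of the given radius."""
    points = []
    for _ in range(n):
        angle = random.uniform(0.0, 2.0 * math.pi)
        r = radius + random.uniform(-noise, noise)
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


class_a = ring(0.5, 100)  # inner class
class_b = ring(2.0, 100)  # outer class that encircles class A


def lift(point):
    """Add a third coordinate with a bell-shaped (Gaussian) bump."""
    x, y = point
    return (x, y, math.exp(-(x * x + y * y)))


THRESHOLD = 0.3  # a flat plane z = 0.3, chosen by hand for this toy data


def predict(point):
    """Linear decision in 3D: above the plane is A, below is B."""
    return "A" if lift(point)[2] > THRESHOLD else "B"


correct = sum(predict(p) == "A" for p in class_a)
correct += sum(predict(p) == "B" for p in class_b)
accuracy = correct / (len(class_a) + len(class_b))
print(accuracy)
```

In 2D no straight line separates the two rings, but after the lift a single horizontal plane does. A real kernel method would not build the third coordinate explicitly; this sketch only shows why the lifted space is easier to work in.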

To illustrate this, let’s take the example of predicting the strength of hurricanes. To predict the strength of a hurricane, we need to analyze several factors, like wind speed, pressure, temperature, and humidity. Each observation can be represented as a data point with these factors as its coordinates. However, it is difficult to predict the strength of a hurricane from the raw factors alone.

With the kernel trick, we can treat this data as if it lived in a higher dimension, where the relationship between the factors becomes more apparent. For example, we could use a kernel function that compares two data points based on their temperature, humidity, and wind speed using an exponential. This elevates large values, which could be relevant for hurricanes. You can see that designing these kernels is an easy way to infuse expert knowledge into machine learning systems. Generally, this also helps us better understand the relationship between these factors and predict the strength of a hurricane more accurately.
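As a rough sketch of what an exponential kernel over weather factors could look like, here is the RBF (Gaussian) kernel in plain Python. This is one common exponential kernel and only my assumption about which one fits; the feature values below are made-up, standardized toy numbers and not real measurements, and `gamma` is an arbitrary setting.

```python
import math


def rbf_kernel(x, y, gamma=0.5):
    """RBF kernel: exp(-gamma * squared Euclidean distance).

    Returns 1.0 for identical points and decays towards 0 as they move apart.
    """
    sq_dist = sum((xi - yi) ** 2 for xi, yi in zip(x, y))
    return math.exp(-gamma * sq_dist)


# Toy, made-up standardized features: (temperature, humidity, wind speed)
storm_a = (0.2, 0.5, 1.8)
storm_b = (0.3, 0.4, 1.7)
calm_day = (-0.5, -1.0, -1.2)

sim_storms = rbf_kernel(storm_a, storm_b)  # two similar observations
sim_mixed = rbf_kernel(storm_a, calm_day)  # two very different observations

print(sim_storms, sim_mixed)
```

The two storm-like observations get a similarity close to 1, while the storm and the calm day get a similarity close to 0. Expert knowledge could enter here by weighting the factors differently inside the distance, for example by giving wind speed more influence.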

In conclusion, the kernel trick is a powerful technique that allows us to solve complex machine learning problems by transforming data to a higher dimension. It helps us to better understand the relationship between different factors and make more accurate predictions.

Data Stories

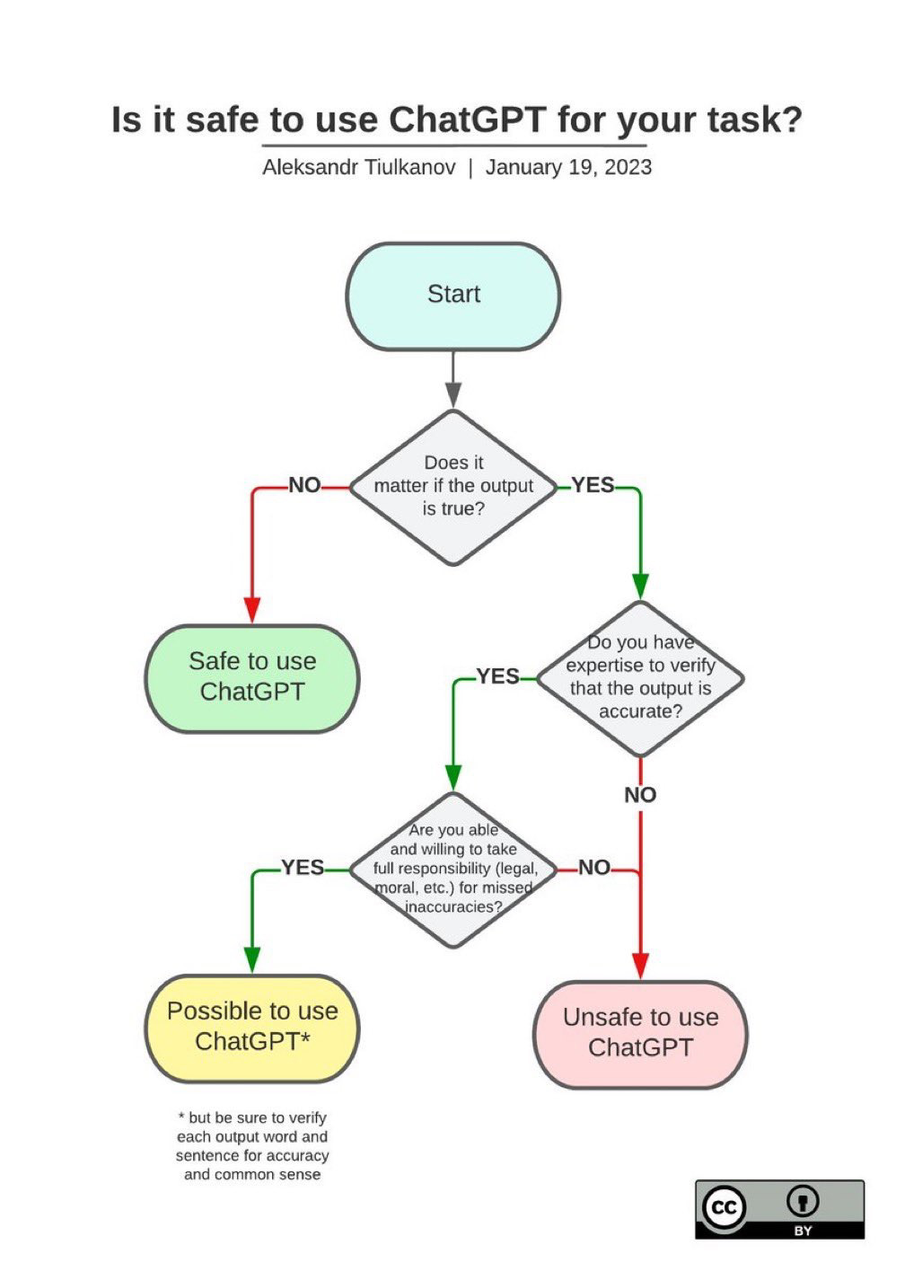

How could I not share this lovely flowchart, now that GPT-4 has come out?

A lovely, thought-provoking guide on when it’s safe to use ChatGPT.

Source: Aleksandr Tiulkanov

Question of the Week

- What is imbalanced data, and what are its implications?

Post your answers on Mastodon and tag me. I’d love to see what you come up with. Then I can include them in the next issue!

Tidbits from the Web

- How to fight Climate Anxiety for when the state of the world has you down

- A nice collection of small teams that achieved big things

- An analysis of why smart people write bad
