🦖 My costume this year was pure dino-mite

Late to the Party 🎉 is about insights into real-world AI without the hype.

Hello internet,

did you enjoy your Halloween celebrations? Let’s recover from all that facepaint with some machine learning!

The Latest Fashion

- Google wrote a blog post about running MetNet for weather prediction operationally

- The right to be forgotten means we need machine unlearning, and this saliency approach seems promising

- I love the idea of caption upsampling

Got this from a friend? Subscribe here!

My Current Obsession

I’m working on enjoying fall more actively, so I went and decorated for Halloween and autumn in general. For Halloween, my colleagues organised a visit to the bowling alley, and I wore my Triceratops costume that I posted on the Latent Space. Super fun but incredibly difficult to do any sports in.

I unlocked the LinkedIn Top Voice in machine learning this week as well. I think it’s probably just a vanity thing, but I like having it, so that’s nice!

I’m getting ready to give a talk this month in the Netherlands about running machine learning in weather operationally. That will be fun!

Thing I Like

It HAS to be my dinosaur costume for Halloween, right?!

Hot off the Press

I wrote a story about how we saved a $60,000 contract by balancing our classes. This was inspired by our last newsletter issue!

In Case You Missed It

My post about Laptops for machine learning is currently really popular again.

On Socials

I adapted the story about balancing classes for LinkedIn and Mastodon, and we had lovely discussions and a wide reception!

I shared PlotNeuralNet on LinkedIn, which you saw 14 months ago already!

And I think my post about my open PhD thesis was shared somewhere again because it just reached 250 reactions.

Python Deadlines

PyCon has flipped the switch on their website, and the call for proposals is live!

Machine Learning Insights

Last week I asked, “How do you ensure machine learning models don’t over-predict rare events?” Here’s the gist of it:

Preventing machine learning models from over-predicting rare events is crucial to ensure the accuracy of your predictions.

In meteorology, this can be particularly important when forecasting rare and extreme weather events, such as hurricanes or tornadoes. Here are some practical ways to tackle this challenge in simple terms:

- Balanced Sampling:

- What it means: Ensure that your dataset includes a representative number of both rare and common events. This helps the model learn to distinguish between them. You can resample data, as we discussed in the last issue, to make the signal learnable but maintain some of the imbalance.

- Meteorological Example: In your weather dataset, if you’re predicting rare events like tornadoes, make sure you have a sufficient number of tornado instances to train your model effectively.

- Use Evaluation Metrics:

- What it means: Choose evaluation metrics that are suitable for imbalanced datasets. Metrics like the F1-score or weighted accuracy are more informative than simple accuracy.

- Meteorological Example: Rather than solely looking at how often your model was correct, evaluate how well it correctly predicts extreme weather events.

- Adjust Decision Threshold:

- What it means: You can change the threshold for classifying an event. By increasing the threshold, you make predictions more conservative.

- Meteorological Example: If your model predicts tornadoes when it’s even slightly uncertain, you can raise the threshold so that it only predicts tornadoes when it’s highly confident, reducing false alarms. But be aware that the Receiver Operating Characteristic (ROC) curve can look overly optimistic when classes are heavily imbalanced, so use the precision-recall curve instead.

- Cost-Sensitive Learning:

- What it means: Assign different costs to prediction errors. For rare events, the cost of missing them (false negatives) is usually higher than the cost of false alarms. This was also the most common comment on my posts about class imbalances!

- Meteorological Example: Missing a tornado warning can be far more costly than a false alarm, so you assign a higher cost to false negatives in your model. This works particularly well on binary predictions but can be extended to more complicated weightings.

- Feature Engineering:

- What it means: Create new features or modify existing ones to help the model better understand the characteristics of rare events.

- Meteorological Example: You might engineer features that capture specific atmospheric conditions associated with extreme weather, making it easier for the model to identify rare events.

- Collect More Data:

- What it means: If possible, gather more data on rare events to improve the model’s prediction capabilities.

- Meteorological Example: To predict rare weather phenomena more accurately, you might collaborate with meteorological agencies to obtain additional data on past rare events, like wildfires.

- Continuous Model Monitoring:

- What it means: Keep an eye on how your model performs in real-world scenarios and make adjustments as necessary. I write about model drift and monitoring probability shifts in the ebook you all have.

- Meteorological Example: Continuously monitor your weather prediction model, and if it starts over-predicting rare events, adjust its parameters or thresholds accordingly.

- Model Calibration:

- What it means: Calibration is the process of fine-tuning the predicted probabilities from your model to align with the actual likelihood of an event occurring. It’s particularly useful for models that produce probability scores. This means we can train a model on rebalanced classes to get a learnable signal and then recalibrate its predictions so they match the real-world frequencies again.

- Meteorological Example: Suppose your weather prediction model provides a probability score for the likelihood of a tropical storm. You can use calibration techniques to ensure that a predicted probability of, say, 20% corresponds to an actual 20% chance of a tropical storm, reducing overconfident predictions.
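To make the "rebalance, then recalibrate" idea a bit more concrete, here is a minimal sketch in plain Python. It assumes the rebalancing was done by randomly undersampling the common class only, which is my assumption for the example and just one of several ways to rebalance. In that case the inflated probabilities can be mapped back with a simple prior correction. The function name and the `keep_fraction` parameter are made up for illustration.

```python
def correct_for_undersampling(p_sampled, keep_fraction):
    """Map a probability from a model trained on undersampled data
    back to the original class balance.

    Assumption: only the common (negative) class was randomly
    undersampled, and `keep_fraction` is the share of negatives kept.
    Undersampling divides the negatives by 1 / keep_fraction, so the
    odds the model learns are too high by that factor. Scaling the
    odds back gives the formula below.
    """
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    if not 0.0 <= p_sampled <= 1.0:
        raise ValueError("p_sampled must be a probability")
    return keep_fraction * p_sampled / (keep_fraction * p_sampled - p_sampled + 1.0)


# Hypothetical setup: we kept 10% of the "no storm" samples for training.
for p in (0.2, 0.5, 0.9):
    corrected = correct_for_undersampling(p, keep_fraction=0.1)
    print(f"model says {p:.2f} -> corrected {corrected:.3f}")
```

A 50% score from the rebalanced model shrinks to roughly 9% here, which is exactly the kind of over-prediction we want to remove. If you rebalanced in a different way, fitting a calibrator on held-out data with the original class balance is the more general route.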

By applying these approaches, you can help ensure that your machine learning model strikes the right balance between being alert to rare events and avoiding excessive over-predictions, which is particularly important in the context of extreme weather forecasting.
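If you want to play with the threshold idea from the list above, here is a small sketch in plain Python. The labels and scores are made-up toy data, the helper names are hypothetical, and the 60% precision target is an arbitrary choice for the example. It sweeps the candidate thresholds and picks the lowest one that reaches the precision we want, so we cut false alarms without giving up more recall than necessary.

```python
def precision_recall(labels, scores, threshold):
    """Precision and recall when we predict the event for scores >= threshold."""
    pairs = list(zip(labels, scores))
    tp = sum(1 for y, s in pairs if s >= threshold and y == 1)
    fp = sum(1 for y, s in pairs if s >= threshold and y == 0)
    fn = sum(1 for y, s in pairs if s < threshold and y == 1)
    # Convention for this sketch: no positive predictions means precision 1.0.
    precision = tp / (tp + fp) if (tp + fp) else 1.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def lowest_threshold_for_precision(labels, scores, min_precision):
    """Lowest candidate threshold that reaches min_precision, or None."""
    for threshold in sorted(set(scores)):
        precision, _ = precision_recall(labels, scores, threshold)
        if precision >= min_precision:
            return threshold
    return None


# Made-up toy data: 1 = tornado, 0 = no tornado.
labels = [0, 0, 0, 0, 0, 0, 0, 1, 0, 1]
scores = [0.05, 0.10, 0.15, 0.20, 0.30, 0.35, 0.55, 0.60, 0.65, 0.90]

default = precision_recall(labels, scores, 0.5)
threshold = lowest_threshold_for_precision(labels, scores, min_precision=0.6)
tuned = precision_recall(labels, scores, threshold)

print(f"threshold 0.50: precision={default[0]:.2f}, recall={default[1]:.2f}")
print(f"threshold {threshold:.2f}: precision={tuned[0]:.2f}, recall={tuned[1]:.2f}")
```

On this toy data, moving the threshold from 0.5 to 0.6 removes one false alarm and still catches both tornadoes. On real data you would pick the threshold on a validation set, not on the data you report results on.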

Data Stories

It’s time we explain LLMs to laypeople!

But often, we need technical language and nice animations that scientists usually don’t have the skills to create.

Luckily, specialised journalists, science communicators, animators and web developers have experience taking complex topics and breaking them down into bite-sized chunks.

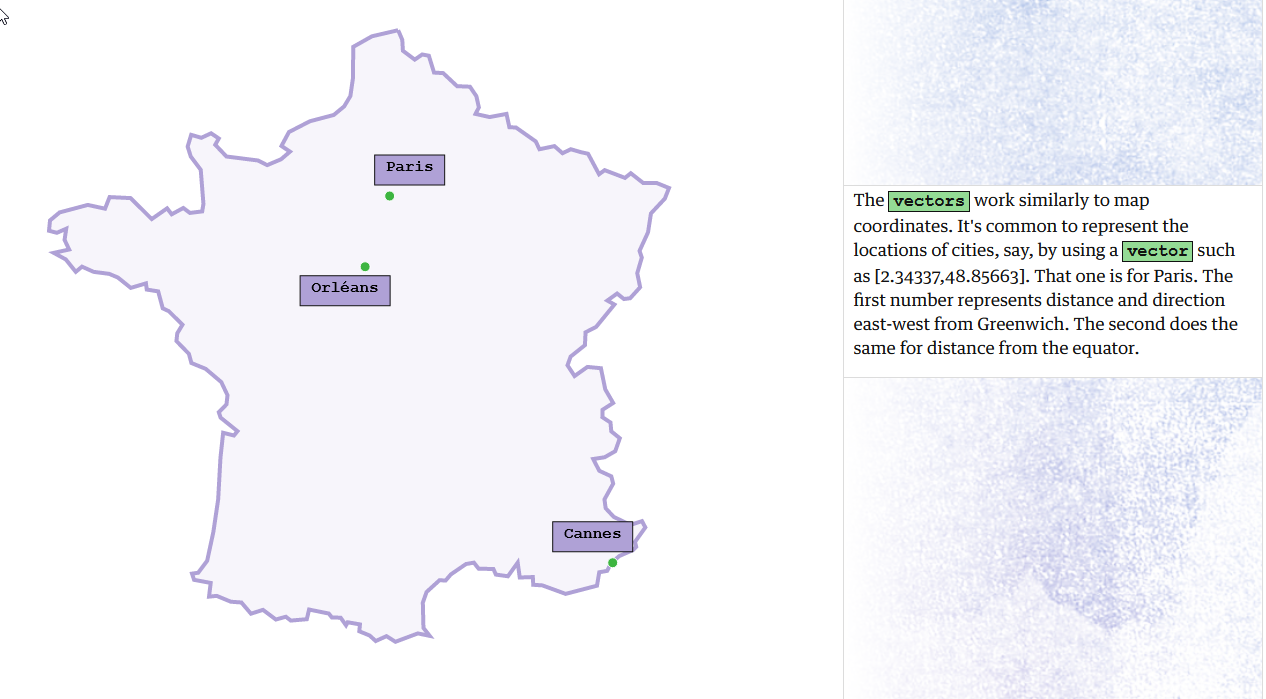

Journalists at The Guardian have now made a beautiful scrollsplainer.

I really liked the step-wise explanation that gives people an intuition about the technology behind ChatGPT.

Check it out on The Guardian

Question of the Week

- What’s your favourite ML library, and why is it XGBoost?

Post your answers on Mastodon and tag me. I’d love to see what you come up with. Then I can include them in the next issue!

Tidbits from the Web

- This commentary on a deer somehow had me cackling

- They saved half a million dollars in a tale of corporate frustration

- These stunts in the water are very fun and impressive
