🔝 It's weird Python was invented in the Netherlands, since it's above C-level

Late to the Party 🎉 is about insights into real-world AI without the hype.

Hello internet,

Where’d that week go already? Time flies when you’re having fun. Let’s look at some machine learning and a surprise appearance of Python!

The Latest Fashion

- Meta just published Code Llama

- You can now run Python in Excel natively

- How do you explain gradient boosting?

Got this from a friend? Subscribe here!

My Current Obsession

On Tuesday, we watched the Barbie movie, and I thought it was tons of fun. The ending was a bit weak, but overall, it was delightful! I loved all the references to other movies, the general cinematic work and, of course, the feminist vibes! Highly recommend it.

I’m still obsessed with the Daily Meeze by Marie Poulin. It’s been working really well so far, but of course, I used it a bit to push myself, which I need to be careful with. I have days where I plan downtime, but the guilt of not filming anything for YouTube or TikTok is always creeping in. If I ever find a way of filming easier, I’ll probably just add another thing to my task list. But generally, it would still be nice to create videos regularly. Creating things people care about would be even nicer, but that’s a whole different problem. But at least we’re experimenting now!

Thing I Like

I’m still playing around with automations on my light switches and power sources. I was hesitant to put my computer on a Wi-Fi switch but decided to build some careful pipelines around it. Now, the power shuts off when standby is detected, and it turns on in the morning when I start making my coffee to get ready.

Hot off the Press

I wrote a blog post about my confusion about this very simple concept: auto-regressive models. I also wrote about improving the output from ChatGPT.

In Case You Missed It

My fellow ADHDers and Notion connoisseurs have been enjoying my post on task sequences in Notion to-do lists again.

On Socials

I wrote about the differences between ML scientists and ML engineers on LinkedIn.

Mastodon liked the Pyflow library.

I also wrote about validating ChatGPT output and shared a little story about learning auto-regressive models, and the one on Notion AI was quite popular.

Python Deadlines

I found a new Python deadline for Python Pizza and XtremePython, but I’m not sure about the deadline for XtremePython.

Also, the deadline for PyCon Sweden is fast approaching, and so is the DjangoDay Copenhagen deadline.

Machine Learning Insights

Last week, I asked, “Could you explain the concept of gradient descent and its significance in optimising machine learning models?” Here’s the gist of it:

Gradient descent is a fundamental optimisation algorithm used in machine learning to find the optimal values of parameters for a given model. Imagine you’re standing on a hilly landscape, and your goal is to reach the lowest point, corresponding to the minimum of a function. Gradient descent helps you find the path that leads you downhill most efficiently.

In the context of machine learning, the “hilly landscape” is represented by a mathematical function that measures how well a model’s predictions match the actual data. The goal is to minimise this function, often called a “loss” or “cost” function. Gradient descent works by iteratively adjusting the model’s parameters in the direction that decreases the loss function the most.
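To make the "walking downhill" idea concrete, here is a minimal sketch in plain Python. The toy loss, the function names, the learning rate, and the step count are all my own assumptions for illustration, not taken from any particular library.

```python
def gradient_descent(grad, x0, learning_rate=0.1, steps=100):
    """Minimise a 1-D function given its gradient, starting from x0."""
    x = x0
    for _ in range(steps):
        # Step against the gradient, i.e. downhill on the loss landscape.
        x = x - learning_rate * grad(x)
    return x


def toy_grad(x):
    """Gradient of the toy loss f(x) = (x - 3)^2, which has its minimum at x = 3."""
    return 2.0 * (x - 3.0)


minimum = gradient_descent(toy_grad, x0=0.0)
print(minimum)  # very close to 3.0
```

Each step moves the parameter a little in the direction that lowers the loss. A learning rate that is too large would overshoot the valley, and one that is too small would take forever to get there.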

Significance in Optimising Models: Gradient descent is a crucial optimisation technique in machine learning for several reasons:

- Efficient Parameter Updates: Gradient descent helps models find good parameter values by moving them in small steps in the direction that decreases the loss function. This lets the model converge efficiently towards a minimum of the loss, although for complex models this is not guaranteed to be the best possible configuration.

- Handling Complex Models: Many machine learning models have millions, even billions of parameters, and manually finding the best values can be extremely challenging or even impossible. Gradient descent automates this process and scales well to complex models as long as they’re differentiable.

- Flexibility: Gradient descent can be applied to various machine learning algorithms, including linear regression, neural networks, and support vector machines. This makes it a versatile optimisation technique.

- Adaptability to Data: As the model learns from data, the gradient of the loss function points in the direction of steepest ascent, so the parameters are updated in the opposite direction to minimise the error. This adaptability enables models to improve with experience.

- Composability: In addition to classic gradient descent and its stochastic sibling, there are many ways to modify gradient descent to improve convergence and stabilise training, such as weight decay, learning rate schedules, and momentum.
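The composability point can be sketched in a few lines. This is a hedged illustration on the toy loss (w - 3)^2, using classic "heavy ball" momentum and L2-style weight decay; the function names and hyperparameter values are hypothetical choices of mine, and real frameworks implement several variants of both ideas.

```python
def momentum_step(w, velocity, grad, learning_rate=0.05, momentum=0.9, weight_decay=0.0):
    """One parameter update with momentum and L2-style weight decay."""
    # Weight decay adds a pull towards zero on top of the loss gradient.
    g = grad + weight_decay * w
    # Momentum keeps a running velocity, so past gradients carry over.
    velocity = momentum * velocity - learning_rate * g
    return w + velocity, velocity


def minimise(weight_decay, steps=300):
    """Run the update on the toy loss (w - 3)^2, starting from w = 0."""
    w, velocity = 0.0, 0.0
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)  # gradient of the toy loss
        w, velocity = momentum_step(w, velocity, grad, weight_decay=weight_decay)
    return w


plain = minimise(weight_decay=0.0)    # ends up near 3.0
decayed = minimise(weight_decay=0.1)  # pulled slightly towards zero, near 6 / 2.1
print(plain, decayed)
```

The core loop stays the same; the extras only change how the gradient is turned into a step. Note how weight decay shifts the solution away from the pure loss minimum, which is exactly the regularising effect it is meant to have.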

Example from Meteorological Data: Suppose we’re building a machine learning model based on historical weather data to predict tomorrow’s temperature. How well its predictions match the actual temperatures determines the model’s performance. Gradient descent helps us adjust the model’s internal “weights” and “biases” (parameters) in a way that minimises the difference between predicted and actual temperatures. As the model iteratively updates these parameters using gradient descent, it becomes better at making accurate temperature predictions.
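As a toy version of the temperature example, the sketch below fits a single weight and bias that map today's temperature to tomorrow's. The data is entirely made up (a synthetic linear relationship, not real observations), and the variable names, learning rate, and number of iterations are assumptions for illustration.

```python
# Made-up data: today's temperature (°C) and tomorrow's temperature (°C).
today = [10.0, 12.0, 15.0, 18.0, 20.0, 22.0, 25.0]
tomorrow = [0.8 * t + 3.0 for t in today]  # synthetic "truth", not real observations

# Standardise the input so one learning rate works for both parameters.
mean = sum(today) / len(today)
std = (sum((t - mean) ** 2 for t in today) / len(today)) ** 0.5
z = [(t - mean) / std for t in today]

weight, bias = 0.0, 0.0
learning_rate = 0.1
n = len(z)

for _ in range(200):
    # Prediction errors under the current parameters.
    errors = [weight * zi + bias - yi for zi, yi in zip(z, tomorrow)]
    # Gradients of the mean squared error with respect to weight and bias.
    grad_weight = 2.0 / n * sum(e * zi for e, zi in zip(errors, z))
    grad_bias = 2.0 / n * sum(errors)
    # Step against the gradient.
    weight -= learning_rate * grad_weight
    bias -= learning_rate * grad_bias


def predict(temp_today):
    """Predict tomorrow's temperature from today's, using the fitted parameters."""
    return weight * (temp_today - mean) / std + bias


print(predict(16.0))  # close to 0.8 * 16 + 3 = 15.8 on this synthetic data
```

A real forecasting model would have far more inputs and parameters, but the loop has the same shape: predict, measure the error, compute gradients, and nudge the parameters.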

In summary, gradient descent is a powerful optimisation technique in machine learning. It guides models to find optimal parameter values by iteratively adjusting them in the direction that minimises a loss function. This algorithm significantly enhances the accuracy and effectiveness of machine learning models across various domains, including meteorology.

Data Stories

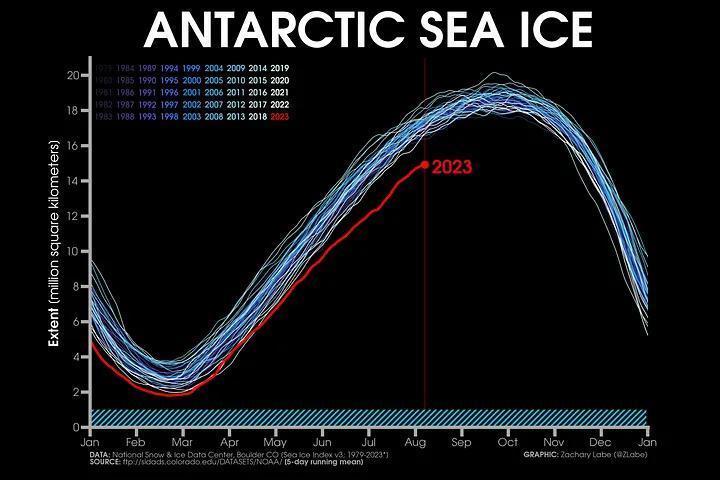

I think Spaghetti plots are a wonderful way to show the distribution of time series.

Here, we can see Arctic sea ice for each year, which beautifully shows its seasonality.

But we can also see how anomalous 2023 is in this series.

That’s by design.

These plots are called spaghetti plots disparagingly because it’s impossible to follow any of these lines individually. So you have to put in the work to make these plots convey any information.

In the plot, one line is highlighted specifically to show its anomalous behaviour.

The blog post in the source discusses how to make these data visualisations work.

Re-posted by Lee Vaughan

Question of the Week

- What strategies do you employ to speed up neural network training?

Post them on Mastodon and tag me. I’d love to see what you come up with. Then, I can include them in the next issue!

Job Corner

The ECMWF is hiring five people who touch machine learning right now!

Four positions in the core team to develop data-driven machine learning weather forecasting:

- Machine Learning Engineer: Focus on model optimisation and parallel implementations to train large machine learning models on vast datasets. Prior experience with deep learning frameworks, model optimisation, and memory footprint improvements is essential. Background in earth-system modelling is welcomed.

- Observations and Data Assimilation Expert: Interface observations with machine learning algorithms and play a vital role in data assimilation. Exceptional interpersonal skills and expertise in using earth-system observations are highly valuable for this role.

- Machine Learning Scientist for Learning from Observations: Contribute to making future earth system predictions from observation data using deep learning frameworks. Experience in earth-system observation data and data assimilation approaches is desirable.

- Machine Learning Scientist for Precipitation: Specialise in accurate precipitation predictions with generative machine learning models. Experience with GANs, VAEs, or Diffusion approaches is advantageous, along with expertise in using neural networks for precipitation prediction.

And one on the EU project Destination Earth:

You will leverage cutting-edge machine learning techniques and statistical methods to support uncertainty quantification for weather-induced extremes in the revolutionary Destination Earth (DestinE) Digital Twin. Your work will contribute to more accurate and reliable predictions, shaping the future of weather forecasting and its impact on climate understanding and resilience. If you’re a proactive and talented individual with a passion for Earth System Science and a flair for machine learning, apply now and make a meaningful difference in tackling climate challenges.

(I like to stress that these positions are, as always, written by a committee, so if you identify as part of an under-represented minority, please consider applying, even if you don’t hit every single bullet point.)

Tidbits from the Web

- AI bots are now so good at solving Captchas, they beat humans…

- This video protecting goats from hurting each other is adorable

- This PyCon keynote helps you deal better with people
