🐸 Are frogs happier because they eat what bugs them?

This edition covers AutoDiff puzzles, an AI startup, an ML Journal Club, bias-variance balance, Barcelona streets, and creative musical short videos.

Late to the Party 🎉 is about insights into real-world AI without the hype.

Hello internet,

I’m back! I had a case of the anxieties last week, so I missed the issue. Apologies.

In this issue, we’ll look at AutoDiff puzzles, “There’s an AI for That”, and a startup by the scikit-learn founders. The Latent Space is starting a Journal Club with the Rolnick paper. We’ll also discuss balancing bias and variance in small datasets. Then we’ll look at the streets of Barcelona and round things off with some lovely, creative musical short videos.

Let’s dive right in!

The Latest Fashion

- Never search for AI Apps again with “There’s an AI for That”

- The folks around scikit-learn founded a startup to promote open-source ML: :probabl

- If you want to learn about automatic differentiation, here’s a puzzle Colab notebook!

Worried these links might be sponsored? Fret no more. They’re all organic, as per my ethics.

My Current Obsession

We’re getting a Journal Club in the Latent Space!

Dan Rishea reached out to start organising the inaugural event in the coming week. We’ll discuss this paper:

Tackling Climate Change with Machine Learning (D. Rolnick et al. 2022)

- Why? The topic is a collective experience we all share, and the article itself is aimed at a wide audience, from researchers to local governments. It details how different models can be applied to the challenges posed by climate change.

https://dl.acm.org/doi/pdf/10.1145/3485128

We’re currently finding a suitable time slot, so make sure to join the community to cast your vote!

Hot off the Press

I got featured by ECMWF, showcasing the ML team and some of our vacancies.

In Case You Missed It

I have worked a bit more on updating the notebooks in ML.recipes.

On Socials

I shared the Annotated Transformer, which was very popular. “Want to do a PhD in ML? Just know stuff!” was predictably popular on LinkedIn, and this f-string cheatsheet was also making the rounds.

Python Deadlines

I found new Calls for Participation for Kiwi Python, PyCon Colombia, and EuroPython. Special shout-out to EARL, who want to get more Pythonistas on board.

SciPy US and DjangoCon Europe deadlines are coming up!

Machine Learning Insights

Last week, I asked, “What strategies are most effective for balancing bias and variance in small dataset machine learning models?” Here’s the gist of it:

Balancing bias and variance, especially in small dataset machine learning models, is crucial to ensure good generalisation of the model to unseen data. Here are some strategies that can be effective:

- Simplifying the Model: Start with simple models to avoid high variance. Complex models can easily overfit a small dataset. For instance, linear models or small decision trees can be less prone to overfitting compared to deep neural networks.

- Regularisation: Implement regularisation techniques such as L1 (Lasso) and L2 (Ridge) regularisation. These techniques add a penalty on the size of the coefficients to the loss function, which helps control overfitting.

- Resampling Techniques: Employ resampling techniques such as bootstrapping or cross-validation to see how much the model changes across different samples of the training data. This can help you choose a model that is more robust and less sensitive to the noise in the data.

- Ensemble Methods: Combine multiple models to improve the trade-off. Bagging (Bootstrap Aggregating) reduces variance without substantially increasing bias, while boosting mainly reduces bias and needs care on small datasets to avoid overfitting. For instance, Random Forests use bagging to create an ensemble of decision trees trained on different subsets of data, reducing the overall variance.

- Feature Selection: Carefully select features to reduce the number of input variables. This can help mitigate the curse of dimensionality and reduce overfitting by eliminating irrelevant or redundant features.

- Data Augmentation: In some cases, especially with images, generating new training samples via data augmentation can be effective. Techniques might include cropping, rotating, or flipping images, or synonym replacement and sentence paraphrasing for text data, to increase the effective size of the dataset.

- Domain-specific Techniques: Incorporate domain knowledge to preprocess data or engineer features that are more informative and less noisy. In meteorology, for instance, understanding the underlying physical processes can help select relevant features and reduce the model’s complexity to create better forecasts.

- Early Stopping: When using iterative models like gradient boosting or neural networks, early stopping can prevent overfitting by halting the training process when the performance on a validation set starts to deteriorate.

- Bayesian Techniques: Implement Bayesian models or regularisation, which incorporate prior knowledge into the model. This can be particularly useful in small datasets where the prior can significantly inform the learning process.
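To make the regularisation point a bit more concrete, here is a minimal simulation sketch. Everything in it is an assumption for illustration: the sine-shaped `true_function`, the noise level, the degree-9 polynomial features, and the helper names are all hypothetical, and the ridge penalty is applied to the intercept too for simplicity. It refits a ridge model on many small datasets and estimates squared bias and variance for a few penalty strengths.

```python
import numpy as np


def true_function(x):
    """Hypothetical ground truth, used only for this simulation."""
    return np.sin(2 * np.pi * x)


def polynomial_features(x, degree):
    """Design matrix with columns x^0 .. x^degree."""
    return np.vander(x, degree + 1, increasing=True)


def fit_ridge(X, y, alpha):
    """Closed-form ridge (L2) solution: (X^T X + alpha * I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)


def bias_variance(alpha, degree=9, n_train=15, n_repeats=200, noise=0.3, seed=0):
    """Estimate squared bias and variance by refitting on many small datasets."""
    rng = np.random.default_rng(seed)
    x_test = np.linspace(0.0, 1.0, 50)
    X_test = polynomial_features(x_test, degree)
    predictions = np.empty((n_repeats, x_test.size))
    for i in range(n_repeats):
        # Draw a fresh small training set each time.
        x_train = rng.uniform(0.0, 1.0, n_train)
        y_train = true_function(x_train) + rng.normal(0.0, noise, n_train)
        weights = fit_ridge(polynomial_features(x_train, degree), y_train, alpha)
        predictions[i] = X_test @ weights
    mean_prediction = predictions.mean(axis=0)
    bias_squared = np.mean((mean_prediction - true_function(x_test)) ** 2)
    variance = np.mean(predictions.var(axis=0))
    return bias_squared, variance


if __name__ == "__main__":
    for alpha in (1e-6, 1e-3, 1e-1, 10.0):
        b2, var = bias_variance(alpha)
        print(f"alpha={alpha:g}  bias^2={b2:.3f}  variance={var:.3f}")
```

In a setup like this, a tiny penalty tends to give wildly varying fits, while a very large one flattens the model and pushes the bias up. The interesting region is somewhere in between.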

Balancing bias and variance is about finding the right model complexity that captures the underlying patterns in the data without being swayed by the noise. The choice of strategy might depend on the specific characteristics of the dataset and the problem at hand. In practice, it often involves trying multiple approaches and selecting the one that offers the best trade-off.
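“Trying multiple approaches and selecting the best one” could look roughly like the sketch below: k-fold cross-validation over a handful of ridge penalties on a small synthetic dataset. The data, the candidate `alphas`, and the helper names (`cross_validated_mse`, `select_alpha`) are hypothetical choices for illustration, not a recommended recipe.

```python
import numpy as np


def fit_ridge(X, y, alpha):
    """Closed-form ridge (L2) solution; the intercept is penalised too."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ y)


def cross_validated_mse(X, y, alpha, n_folds=5, seed=0):
    """Mean validation MSE over shuffled k-fold splits."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    errors = []
    for k in range(n_folds):
        val_idx = folds[k]
        train_idx = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        weights = fit_ridge(X[train_idx], y[train_idx], alpha)
        errors.append(np.mean((X[val_idx] @ weights - y[val_idx]) ** 2))
    return float(np.mean(errors))


def select_alpha(X, y, alphas):
    """Return the penalty with the lowest cross-validated error, plus all scores."""
    scores = {alpha: cross_validated_mse(X, y, alpha) for alpha in alphas}
    best = min(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    # Small synthetic dataset: 30 noisy samples of a sine curve (an assumption).
    rng = np.random.default_rng(42)
    x = rng.uniform(0.0, 1.0, 30)
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.3, 30)
    X = np.vander(x, 10, increasing=True)  # degree-9 polynomial features

    best, scores = select_alpha(X, y, [1e-6, 1e-4, 1e-2, 1.0, 100.0])
    for alpha, mse in scores.items():
        print(f"alpha={alpha:g}  cv_mse={mse:.3f}")
    print(f"selected alpha={best:g}")
```

With only 30 samples the fold scores are noisy themselves, so it is worth treating the selected value as a rough guide rather than a precise optimum.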

Got this from a friend? Subscribe here!

Data Stories

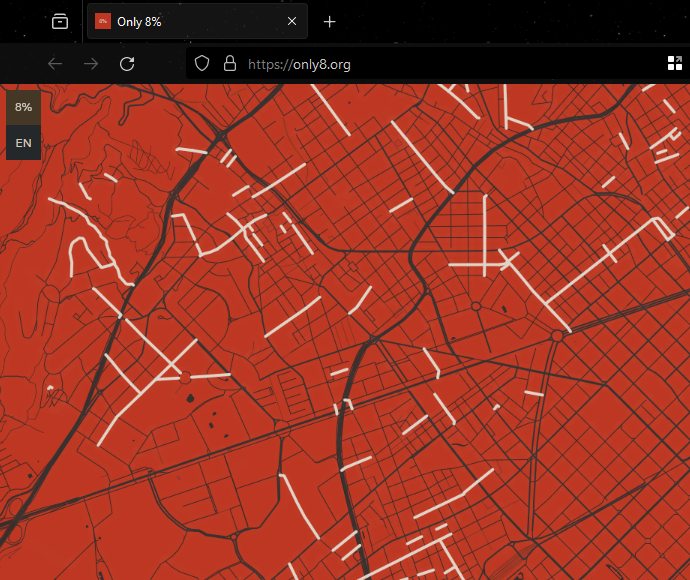

There’s not much to say about Only 8%.

It’s a beautiful exploration of the streets of Barcelona.

Scroll for yourselves!

Source: Only-8

Question of the Week

- What strategies are most effective for balancing bias and variance in small dataset machine learning models?

Post your answers on Mastodon and tag me. I’d love to see what you come up with. Then I can include them in the next issue!

Tidbits from the Web

- I love “spurious correlations”, but what if we have ChatGPT explain the causality?

- This song performance has me in pieces every time I see it

- And this rendition of Bohemian Rhapsody is incredibly creative, too
