🐝 An indecisive bee should be a “Maybe”

Late to the Party 🎉 is about insights into real-world AI without the hype.

Hello internet,

it's been a week! I've been polishing my new projects to get them into the best shape possible, but first, some machine learning!

The Latest Fashion

- This ML training handbook by Google is quite handy, albeit not the newest.

- Tips for structuring your research group's Python packages

- Finally, a proper Stable Diffusion fork for Macs!

Got this from a friend? Subscribe here!

My Current Obsession

What a week! The Latent Space community is launching, my ECMWF MOOC segment went live, and I worked on a lot of backend stuff for my side projects!

First things first! I want to launch a small inclusive (queer, feminist & ND) community for machine learning folks: The Latent Space. Many of you know that I'm always looking to make the spaces I'm in more inclusive. I'm pretty open about being queer and neurodivergent, and I try my best to incorporate feminist and black-inclusive values. I want this to be a space outside the yelling matches on Twitter and LinkedIn. We'll let this grow organically for now. I want to start out with a small Accountability Circle to grow together and a fun little Journal Club event next week:

Join the latent.club

My segment on deep learning for the ECMWF MOOC in weather and climate prediction went live. On Wednesday, I held a webinar to answer participants' questions. Six minutes in, my internet went out. I managed to save it, and upgrading my data plan for emergencies finally paid off. I held the entire thing from my phone, which is a bummer since I have all this nice equipment on my PC, but whatever… I still got excellent ratings despite making everyone wait.

I have been doing a lot of behind-the-scenes work on my websites, plus a lot of writing I'll share further down. For the curious, I slimmed down the JavaScript on my website so each page only loads the bundles it explicitly needs. Hopefully that speeds up the pages a bit. Then I found out that Bing shut down its "notification service" for website changes and now uses a protocol called IndexNow instead, which is much less convenient to use. But Cloudflare actually helps you submit changed pages (and Bing recommends using Cloudflare for this, too!). I also added some search-engine stuff to ml.recipes, data-science-gui.de, and pythondeadlin.es. I want these tools to be maximally useful, so maybe this helps people searching on Google find my stuff!
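For the curious, here's roughly what an IndexNow submission looks like under the hood. This is a minimal sketch, assuming the public protocol described at indexnow.org: you host a key file on your site and POST a JSON list of changed URLs. The host, key, and URLs are placeholders, and the helper `build_indexnow_request` is hypothetical; it only builds the request, it doesn't send it.

```python
import json
import re
import urllib.request

# Shared IndexNow endpoint; participating search engines (e.g. Bing) share submissions.
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(host: str, key: str, urls: list[str]) -> urllib.request.Request:
    """Build (but do not send) an IndexNow POST request for a batch of changed URLs.

    Hypothetical helper for illustration; host and key are placeholders.
    """
    # The protocol expects an 8-128 character key of letters, digits, and dashes.
    if not re.fullmatch(r"[A-Za-z0-9-]{8,128}", key):
        raise ValueError("IndexNow key must be 8-128 characters of a-z, A-Z, 0-9 or '-'")
    payload = {
        "host": host,
        "key": key,
        # The same key must be served as a plain-text file on your own site.
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": urls,
    }
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


# Placeholder host and key for illustration only.
req = build_indexnow_request(
    "example.org", "my-placeholder-key-1234", ["https://example.org/new-post/"]
)
print(req.get_method(), req.full_url)
print(json.loads(req.data)["urlList"])
# To actually submit, you would call: urllib.request.urlopen(req)
```

The key file is how the search engine checks that you own the site, which is the part that makes IndexNow less convenient than the old ping-style notifications, and why letting Cloudflare handle it is attractive.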

I also worked on a huge Resource section for pythondeadlin.es. It felt like a missed opportunity to just have a list of Python conferences without guiding newer community members on how to submit a CfP, let alone how to write a good one.

I'm also trying out Plausible Analytics as a privacy-preserving analytics tool to possibly replace Google Analytics. But it would be yet another expense for projects I don't make any money from, so I'm only trialing it for now. So far, I like it much better than Google Analytics. Might be worth it!

Thing I Like

I upgraded my audio equipment, and the Roland Bridge Cast is such a fun new toy. It lets me split my computer's audio into separate streams. That's completely unnecessary for my use case, since I'm clearly not a streamer, but it's a lot of fun.

Hot off the Press

I learned how to add sitemaps to Jupyter Book sites. They're considered good practice for search engine optimization, so I'm happy I got those in!
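A sitemap is just an XML file listing your pages in the format defined at sitemaps.org. With Jupyter Book, one common route is a Sphinx extension such as sphinx-sitemap; to show what actually ends up in the file, here's a minimal standalone Python sketch. The helper `build_sitemap` and the URLs are hypothetical, and this is not how Jupyter Book builds it internally.

```python
import xml.etree.ElementTree as ET

# Namespace required by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal sitemap.xml document listing the given absolute URLs.

    Hypothetical helper for illustration only.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        # <loc> is the only required child; ElementTree escapes characters like '&'.
        ET.SubElement(url_el, "loc").text = url
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


# Placeholder URLs for illustration.
sitemap_xml = build_sitemap([
    "https://example.org/",
    "https://example.org/intro.html",
])
print(sitemap_xml)
```

Optional tags like `<lastmod>` can be added per URL, but a list of `<loc>` entries is already a valid sitemap that you can submit to search engines.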

I also learned I can tell Windows natively to put Spotify on my speakers or headphones without any extra software! That was a pleasant surprise.

Then I wrote a bunch of guides that I hope are useful for the resource section on pythondeadlin.es:

- Starting with "How to get to talk at Python conferences?", but also how to create a fantastic presentation.

- When I got started, I always wondered what a CfP even is and how you write a proposal.

- It helps to have a good idea about your target audience.

- And, of course, there are some common pitfalls.

- This one's a bit more general, but maybe some folks need help with "What should my Python conference talk even be about?"

In Case You Missed It

My article about choosing a laptop for machine learning has been making the rounds again.

Machine Learning Insights

Last week I asked about one-hot encoding, and here’s the gist of it:

One-hot encoding is one of the techniques used to represent categorical variables in a way that machine learning algorithms can understand.

Categorical variables represent different categories or groups, such as weather conditions (sunny, cloudy, rainy) or countries (USA, Canada, Mexico). Most machine learning algorithms operate on numbers, so categorical variables usually have to be converted into numerical values first, and one-hot encoding is one way to do this conversion.

In one-hot encoding, each category is represented as a binary vector where only one element is "hot" (i.e., has a value of 1). The rest are "cold" (i.e., have a value of 0). For example, suppose we have a dataset with weather conditions as a categorical variable. We can use one-hot encoding to represent these conditions as follows:

- Sunny: 1 0 0

- Cloudy: 0 1 0

- Rainy: 0 0 1
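Here's a minimal pure-Python sketch of that mapping, just to show there's no magic involved. The helper name `one_hot` is hypothetical; in practice you'd reach for something like scikit-learn's `OneHotEncoder` or pandas' `get_dummies`.

```python
def one_hot(values: list[str], categories: list[str]) -> list[list[int]]:
    """Encode each value as a binary vector with a single 1 at its category's index."""
    index = {cat: i for i, cat in enumerate(categories)}
    vectors = []
    for value in values:
        vec = [0] * len(categories)  # start with every element "cold"
        vec[index[value]] = 1        # make exactly one element "hot"
        vectors.append(vec)
    return vectors


categories = ["Sunny", "Cloudy", "Rainy"]
days = ["Sunny", "Rainy", "Cloudy"]
for day, vec in zip(days, one_hot(days, categories)):
    print(day, vec)
```

Note that the category list fixes the column order, and a value that isn't in it raises a `KeyError` here; real encoders make you choose how to handle such unseen categories.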

The advantage of one-hot encoding is that it allows the machine learning algorithm to easily distinguish between different categories without imposing any arbitrary order or ranking on them. For example, if we were to represent the weather conditions as numerical values (e.g., Sunny=1, Cloudy=2, Rainy=3), the algorithm could treat Rainy as "greater than" Sunny and Cloudy, and Cloudy as halfway between the two, which makes no sense for weather.
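One way to see the ordering problem is through distances, which many algorithms (k-nearest neighbours, for instance) rely on. This small sketch compares the integer codes from the example above with their one-hot vectors.

```python
import math

# Integer codes from the example above: Sunny=1, Cloudy=2, Rainy=3
ordinal = {"Sunny": 1, "Cloudy": 2, "Rainy": 3}
# One-hot vectors from the example above
onehot = {"Sunny": (1, 0, 0), "Cloudy": (0, 1, 0), "Rainy": (0, 0, 1)}

distances = {}
for a, b in [("Sunny", "Cloudy"), ("Sunny", "Rainy"), ("Cloudy", "Rainy")]:
    d_ordinal = abs(ordinal[a] - ordinal[b])   # 1D distance between integer codes
    d_onehot = math.dist(onehot[a], onehot[b])  # Euclidean distance between vectors
    distances[(a, b)] = (d_ordinal, d_onehot)
    print(f"{a}-{b}: integer codes {d_ordinal}, one-hot {d_onehot:.3f}")
```

With integer codes, Sunny and Rainy end up twice as far apart as Sunny and Cloudy. With one-hot vectors, every pair of categories sits at the same distance, √2, so no ordering sneaks in.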

However, one-hot encoding has a downside: it increases the dimensionality of the dataset by adding one column per category, which can make the model more complex and more expensive to train. A feature with ten categories becomes ten columns; a feature with thousands of distinct values, such as postal codes or product IDs, becomes thousands of mostly-zero columns, which quickly gets unwieldy for large datasets.
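Because each one-hot vector contains exactly one 1, it doesn't have to be stored densely. Here's a minimal sketch of the usual workaround: keep only the index of the "hot" element per row, which is essentially what sparse matrix formats do. The function names and the row and category counts are hypothetical, chosen only to illustrate the scale.

```python
def dense_one_hot_size(n_rows: int, n_categories: int) -> int:
    """Number of values stored by a dense one-hot matrix."""
    return n_rows * n_categories


def sparse_one_hot(values: list[str], categories: list[str]) -> list[int]:
    """Store only the column index of the single "hot" element per row."""
    index = {cat: i for i, cat in enumerate(categories)}
    return [index[v] for v in values]


# Hypothetical example: 1,000,000 rows of a feature with 5,000 distinct values
n_rows, n_categories = 1_000_000, 5_000
print("dense values: ", dense_one_hot_size(n_rows, n_categories))
print("sparse values:", n_rows)  # one column index per row

print(sparse_one_hot(["Rainy", "Sunny"], ["Sunny", "Cloudy", "Rainy"]))
```

This is why scikit-learn's `OneHotEncoder` returns a SciPy sparse matrix by default. Sparse storage fixes the memory problem, though the model still sees thousands of input dimensions.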

In conclusion, one-hot encoding is a helpful technique for representing categorical variables in a way that machine learning algorithms can understand. Its main advantage is that it allows for a straightforward representation of categorical data without imposing any order or ranking. Nevertheless, it can increase the dimensionality of the dataset and make the algorithm more complex.

Data Stories

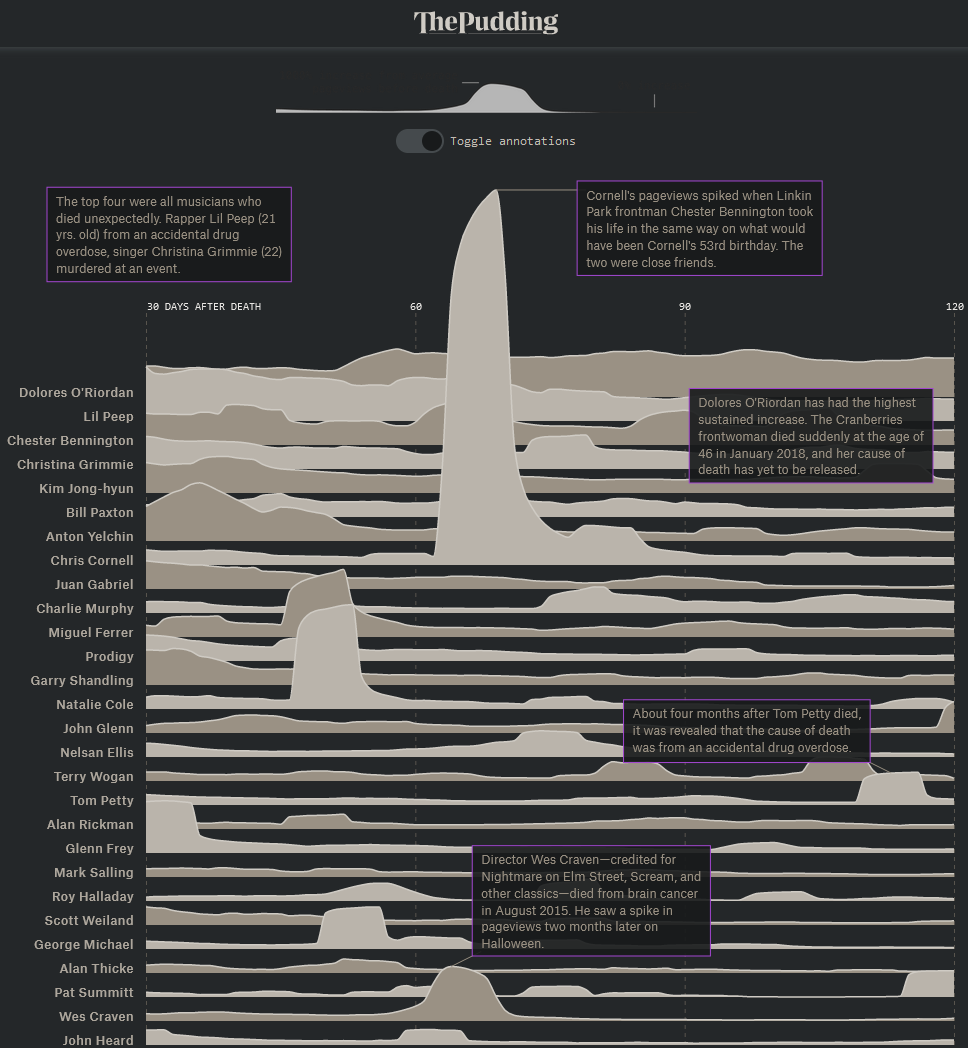

When celebrities die, things happen on the internet.

Wikipedia feels this considerably: people look up the person and their adjacent projects. But how big is this effect?

The Pudding made a fascinating interactive visualization of exactly this: Life After Death on Wikipedia.

Source: Pudding.cool

Question of the Week

- What is the kernel trick, and why is it so impactful?

Post your answers on Mastodon and tag me. I'd love to see what you come up with, and then I can include them in the next issue!

Job Corner

The ECMWF is hiring four (Senior) Scientists for Machine Learning!

If you have experience applying big neural networks, preferably to physical problems, you may find a great place with us!

Salaries are posted as well!

Check it out here: https://jobs.ecmwf.int/displayjob.aspx?jobid=134

Tidbits from the Web

- If you work in computer vision, I can recommend this alternative to the Lena test image.

- Apparently, fake buildings are a thing?!

- 8 hard lessons learned from getting laid off.
